
ARI711S - ARTIFICIAL INTELLIGENCE - 1ST OPP - JUNE 2022


NAMIBIA UNIVERSITY OF SCIENCE AND TECHNOLOGY

FACULTY OF COMPUTING AND INFORMATICS

DEPARTMENT OF COMPUTER SCIENCE

QUALIFICATION: BACHELOR OF COMPUTER SCIENCE

QUALIFICATION CODE: 07BACS

COURSE: ARTIFICIAL INTELLIGENCE

DATE: JUNE 2022

DURATION: 3 HOURS

LEVEL: 7

COURSE CODE: ARI711S

PAPER: THEORY

MARKS: 93

FIRST OPPORTUNITY EXAMINATION QUESTION PAPER

EXAMINER(S): Prof. JOSE QUENUM

MODERATOR: Mr STANTIN SIEBRITZ

INSTRUCTIONS

1. Answer ALL the questions.

2. Read all the questions carefully before answering.

3. Number the answers clearly.

THIS QUESTION PAPER CONSISTS OF 3 PAGES

(Excluding this front page)

PERMISSIBLE MATERIALS

CALCULATOR


ARI711S

Second Exam (continued)

July 2022

Question 1 [25 points]

(a) [15] Consider the blocks world. The blocks can be on a table or in a box. Consider three generic actions, a0, a1 and a2, described as follows:

a0: when applied to a block, keeps it in the box;

a1: when applied to a block, moves it onto the table;

a2: when applied to two blocks, moves the first one on top of the second one.

Consider the following four states in the system:

S0: all blocks are in the box; no block is on the table;

S1: only block B is on the table; all other blocks are in the box;

S2: blocks B and C are both on the table, with C on top of B;

S3: blocks B, C and D are on the table, with D on top of C and C on top of B.

Furthermore, Table 1 provides additional information: each state has a reward, a set of possible actions and a transition model for each action. Note that, for a given action, the probabilities in its transition model sum to 1.

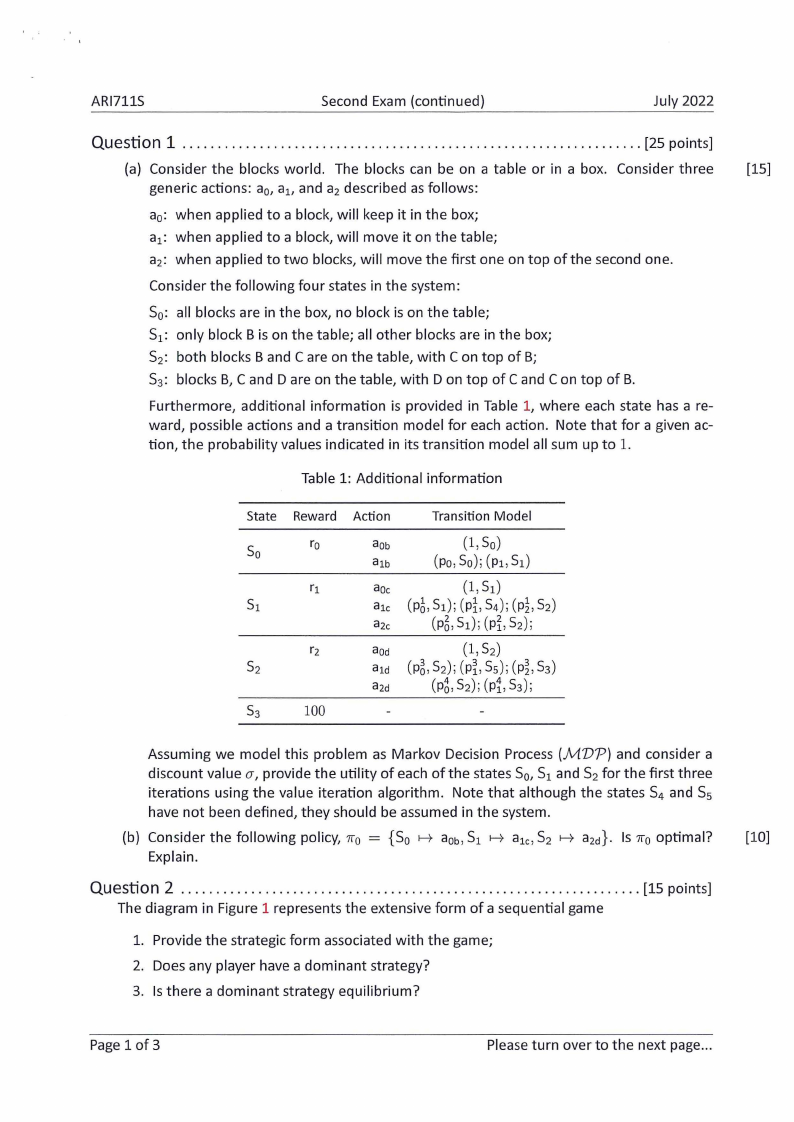

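Table 1: Additional information

| State | Reward | Action | Transition model |
|---|---|---|---|
| S0 | r0 | a0b | (1, S0) |
| | | a1b | (p0, S0); (p1, S1) |
| S1 | r1 | a0c | (1, S1) |
| | | a1c | (p5, S1); (pf, S4); (p½, S2) |
| | | a2c | (p5, S1); (p1, S2) |
| S2 | r2 | a0d | (1, S2) |
| | | a1d | (p5, S2); (p1, S5); (p~, S3) |
| | | a2d | (p6, S2); (p1, S3) |
| S3 | 100 | | |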

Assuming this problem is modelled as a Markov Decision Process (MDP) with a discount factor α, give the utility of each of the states S0, S1 and S2 for the first three iterations of the value iteration algorithm. Note that although states S4 and S5 have not been defined, they should be assumed to exist in the system.
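Value iteration applies the Bellman update U_{k+1}(s) = R(s) + discount · max_a Σ_{s'} P(s' | s, a) U_k(s') to every state, starting from U_0 = 0. The paper leaves the rewards, probabilities and discount as symbols, so this minimal sketch plugs in made-up numbers (all hypothetical). It also assumes that S3, S4 and S5, which have no actions in Table 1, are terminal states whose utility is just their reward, with S4 and S5 given reward 0. It illustrates the mechanics of the update and does not replace the expected symbolic answer.

```python
# Hypothetical numbers: the paper gives only symbols (r0, r1, r2, the p-values, the discount).
DISCOUNT = 0.9
REWARD = {"S0": -1.0, "S1": -1.0, "S2": -1.0, "S3": 100.0, "S4": 0.0, "S5": 0.0}

# Structure of Table 1: state -> action -> [(probability, next state)].
# States without an entry (S3, S4, S5) are treated as terminal (an assumption).
T = {
    "S0": {"a0b": [(1.0, "S0")], "a1b": [(0.2, "S0"), (0.8, "S1")]},
    "S1": {"a0c": [(1.0, "S1")],
           "a1c": [(0.1, "S1"), (0.1, "S4"), (0.8, "S2")],
           "a2c": [(0.3, "S1"), (0.7, "S2")]},
    "S2": {"a0d": [(1.0, "S2")],
           "a1d": [(0.1, "S2"), (0.1, "S5"), (0.8, "S3")],
           "a2d": [(0.3, "S2"), (0.7, "S3")]},
}

def value_iteration(iterations):
    """Return the utility table after each of the first `iterations` Bellman sweeps."""
    U = {s: 0.0 for s in REWARD}          # U_0 = 0 everywhere
    history = []
    for _ in range(iterations):
        new_U = {}
        for s, r in REWARD.items():
            actions = T.get(s)
            if not actions:               # terminal: utility is the reward
                new_U[s] = r
            else:                         # best expected next-state utility
                best = max(sum(p * U[s2] for p, s2 in outcomes)
                           for outcomes in actions.values())
                new_U[s] = r + DISCOUNT * best
        U = new_U
        history.append(U)
    return history

for k, U in enumerate(value_iteration(3), start=1):
    print(k, {s: round(U[s], 4) for s in ("S0", "S1", "S2")})
```

With these made-up numbers, the best action in S2 switches from a1d (iteration 2) to a2d (iteration 3), which shows why the maximisation has to be redone at every sweep.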

(b) [10] Consider the following policy: π0 = {S0 ↦ a0b, S1 ↦ a1c, S2 ↦ a2d}. Is π0 optimal? Explain.
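One way to test whether a policy is optimal is policy evaluation followed by a one-step greedy check: if some state has an action whose expected utility beats the policy's own choice, the policy is not optimal. This sketch assumes made-up values for the symbolic rewards, probabilities and discount (all hypothetical), and treats S3, S4 and S5 as terminal with utility equal to their reward.

```python
# Hypothetical numbers: the paper leaves rewards, probabilities and the discount symbolic.
DISCOUNT = 0.9
REWARD = {"S0": -1.0, "S1": -1.0, "S2": -1.0, "S3": 100.0, "S4": 0.0, "S5": 0.0}
T = {  # Table 1 structure: state -> action -> [(probability, next state)]
    "S0": {"a0b": [(1.0, "S0")], "a1b": [(0.2, "S0"), (0.8, "S1")]},
    "S1": {"a0c": [(1.0, "S1")], "a1c": [(0.1, "S1"), (0.1, "S4"), (0.8, "S2")],
           "a2c": [(0.3, "S1"), (0.7, "S2")]},
    "S2": {"a0d": [(1.0, "S2")], "a1d": [(0.1, "S2"), (0.1, "S5"), (0.8, "S3")],
           "a2d": [(0.3, "S2"), (0.7, "S3")]},
}
PI0 = {"S0": "a0b", "S1": "a1c", "S2": "a2d"}

def expected(U, s, a):
    """Expected next-state utility of taking action a in state s."""
    return sum(p * U[s2] for p, s2 in T[s][a])

def evaluate(policy, sweeps=500):
    """Iterative policy evaluation; states without actions keep U(s) = R(s)."""
    U = {s: 0.0 for s in REWARD}
    for _ in range(sweeps):
        U = {s: REWARD[s] + (DISCOUNT * expected(U, s, policy[s]) if s in policy else 0.0)
             for s in REWARD}
    return U

U = evaluate(PI0)
# Greedy (one-step lookahead) action in each non-terminal state under U^pi0.
greedy = {s: max(T[s], key=lambda a: expected(U, s, a)) for s in T}
print(greedy)
print("pi0 optimal under these numbers:", greedy == PI0)
```

Because a0b keeps B in the box, the transition (1, S0) makes S0 absorbing under π0, so an agent starting in S0 never reaches S3; with these numbers U(S0) settles at r0 / (1 - discount) = -10 and the greedy step switches S0 to a1b.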

Question 2 ..................................................................

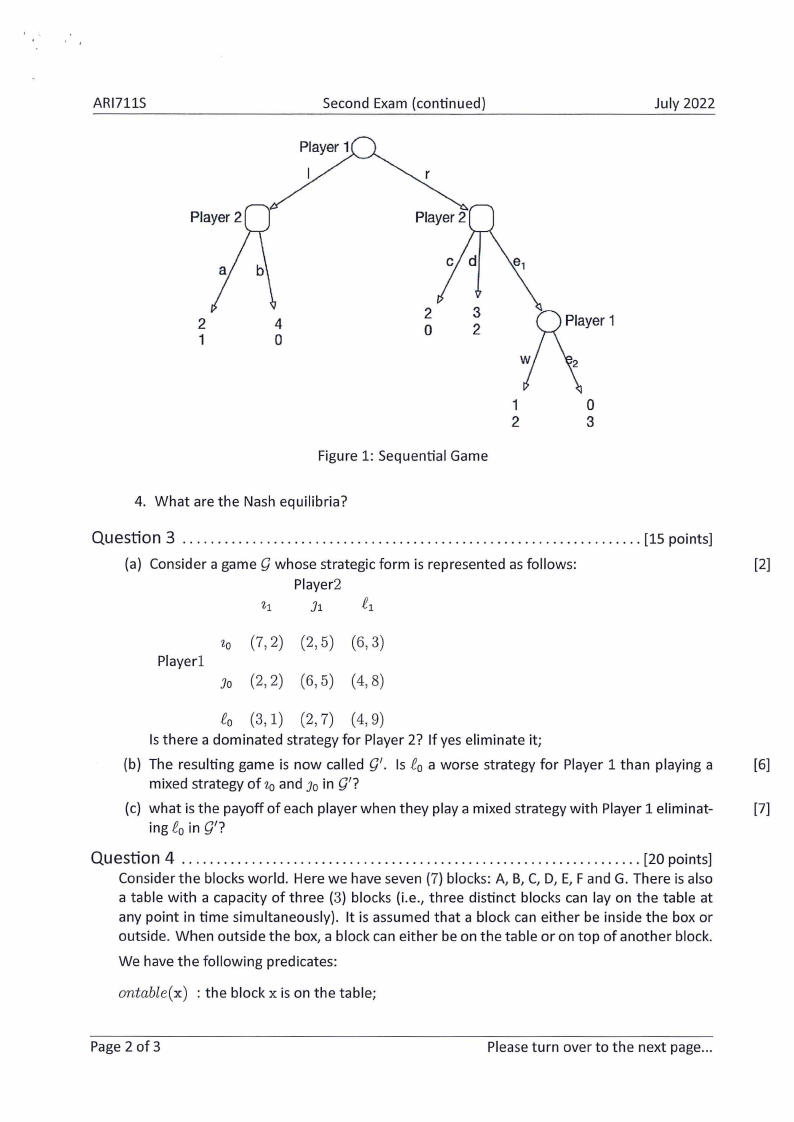

The diagram in Figure 1 represents the extensive form of a sequential game

[15 points]

1. Provide the strategic form associated with the game;

2. Does any player have a dominant strategy?

3. Is there a dominant strategy equilibrium?

Page 1 of 3

Please turn over to the next page ...


Figure 1: Sequential Game

4. What are the Nash equilibria?
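Figure 1's game tree is only available as an image, so this sketch works on a made-up two-stage game instead: Player 1 chooses U or D, Player 2 sees the move and replies l or r. All labels and payoffs are hypothetical. It shows the mechanics behind items 1 to 4:

- In the strategic form, Player 2's strategies are complete contingent plans (one reply per Player 1 move).
- A dominant strategy is taken here to mean one that is at least as good as every alternative against each opponent strategy.
- A pure Nash equilibrium is a cell where neither player gains by deviating alone.

```python
from itertools import product

# Hypothetical two-stage game (NOT the game in Figure 1).
P1_MOVES = ["U", "D"]
P2_MOVES = ["l", "r"]
LEAF = {  # (Player 1 move, Player 2 reply) -> (payoff to 1, payoff to 2)
    ("U", "l"): (3, 1), ("U", "r"): (0, 0),
    ("D", "l"): (1, 2), ("D", "r"): (2, 3),
}

# A Player 2 strategy is a complete plan: one reply for each Player 1 move,
# e.g. ("l", "r") means "l after U, r after D".
P2_PLANS = list(product(P2_MOVES, repeat=len(P1_MOVES)))

def strategic_form():
    """Map each (Player 1 move, Player 2 plan) pair to the leaf payoffs it reaches."""
    return {(a, plan): LEAF[(a, plan[P1_MOVES.index(a)])]
            for a in P1_MOVES for plan in P2_PLANS}

def dominant(table, player):
    """Strategies at least as good as every alternative against each opponent strategy."""
    if player == 1:
        return [a for a in P1_MOVES
                if all(table[(a, t)][0] >= table[(b, t)][0]
                       for t in P2_PLANS for b in P1_MOVES)]
    return [t for t in P2_PLANS
            if all(table[(a, t)][1] >= table[(a, o)][1]
                   for a in P1_MOVES for o in P2_PLANS)]

def pure_nash(table):
    """Cells where neither player can gain by a unilateral deviation."""
    return [(a, t) for (a, t), (u1, u2) in table.items()
            if all(u1 >= table[(b, t)][0] for b in P1_MOVES)
            and all(u2 >= table[(a, o)][1] for o in P2_PLANS)]

table = strategic_form()
print(dominant(table, 1), dominant(table, 2))
print(pure_nash(table))
```

In this made-up game Player 2's plan ("l" after U, "r" after D) is dominant but Player 1 has no dominant strategy, so there is no dominant strategy equilibrium, while three cells of the 2 × 4 strategic form are pure Nash equilibria.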

Question 3 ..................................................................

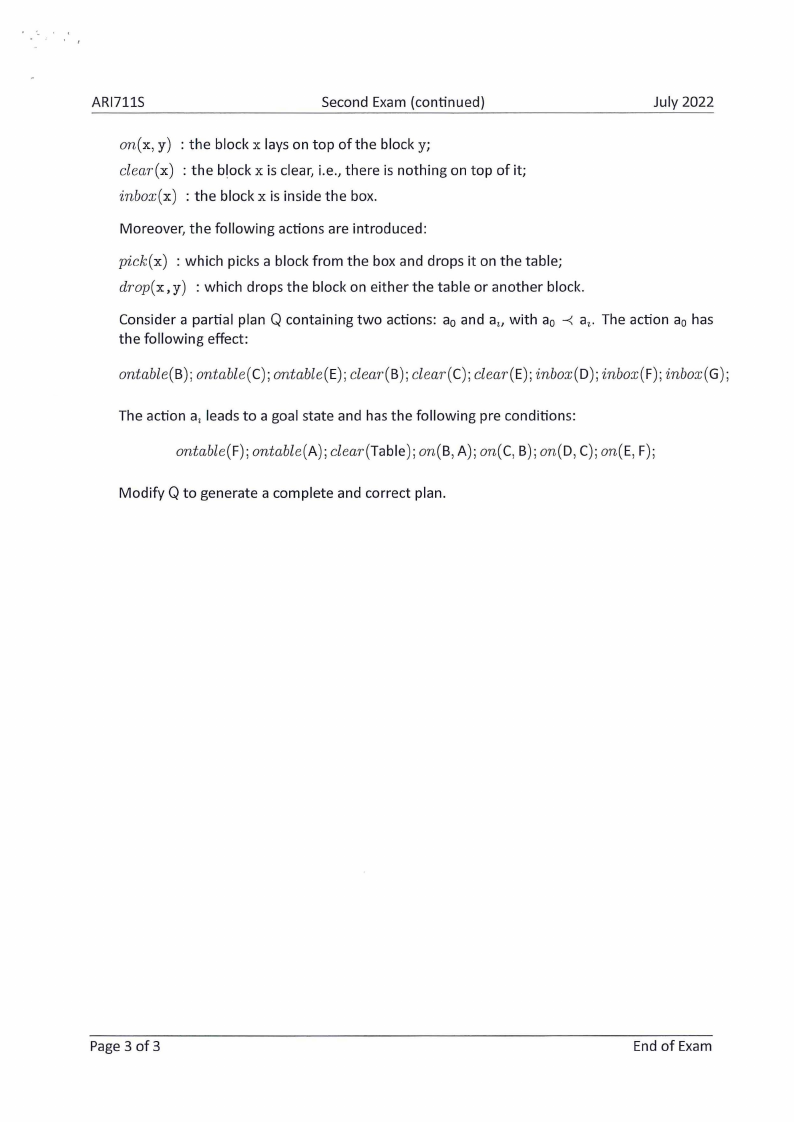

(a) Consider a game g whose strategic form is represented as follows:

Player2

[15 points]

[2]

Zo (7, 2) (2, 5) (6, 3)

Playerl

Jo (2, 2) (6, 5) (4, 8)

f 0 (3, 1) (2, 7) (4, 9)

Is there a dominated strategy for Player 2? If so, eliminate it.
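A strategy of Player 2 is strictly dominated if another strategy gives Player 2 a higher payoff against every row. This sketch checks that pairwise on Player 2's payoffs from the table in part (a). The column labels are only in the original image, so c1, c2 and c3 are placeholder names for the three columns, left to right.

```python
# Player 2's payoffs against Player 1's rows z0, j0, f0 (second number in each cell).
# c1, c2, c3 are placeholder names for the three columns, left to right.
P2_PAYOFFS = {
    "c1": [2, 2, 1],
    "c2": [5, 5, 7],
    "c3": [3, 8, 9],
}

def strictly_dominated(payoffs):
    """Return {strategy: dominator} for each strategy beaten in every row by another one."""
    found = {}
    for s, mine in payoffs.items():
        for t, theirs in payoffs.items():
            if t != s and all(b > a for a, b in zip(mine, theirs)):
                found[s] = t      # record the first dominator found
                break
    return found

dominated = strictly_dominated(P2_PAYOFFS)
print(dominated)   # {'c1': 'c2'}
remaining = [s for s in P2_PAYOFFS if s not in dominated]
print(remaining)   # ['c2', 'c3']
```

The first column (2, 2, 1) is beaten by the second (5, 5, 7) in every row, and also by the third (3, 8, 9), so it can be removed.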

(b) [6] The resulting game is now called G'. Is f0 a worse strategy for Player 1 in G' than a mixed strategy of z0 and j0?
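Player 2's first column is strictly dominated, so G' keeps the second and third columns. A mix that plays z0 with probability q and j0 with probability 1 - q strictly beats f0 exactly when it does so in both remaining columns. This sketch checks a grid of q values with exact fractions. It samples q rather than proving the result for every q; the closed-form check follows the block.

```python
from fractions import Fraction

# Player 1's payoffs in G' against Player 2's second and third columns from part (a).
U1 = {"z0": [2, 6], "j0": [6, 4], "f0": [2, 4]}

def mix_dominates(q, a="z0", b="j0", c="f0"):
    """True if playing a with probability q and b with 1 - q beats c in every column."""
    return all(q * x + (1 - q) * y > z for x, y, z in zip(U1[a], U1[b], U1[c]))

# Exact grid check over q = 0, 1/10, ..., 1.
grid = [Fraction(k, 10) for k in range(11)]
print([str(q) for q in grid if mix_dominates(q)])
```

Solving the two inequalities directly gives 2q + 6(1 - q) > 2, i.e. q < 1, and 6q + 4(1 - q) > 4, i.e. q > 0. So every q strictly between 0 and 1 works (q = 1/2 gives 4 and 5 against f0's 2 and 4), while the pure strategies q = 0 and q = 1 only tie f0 in one column.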

(c) [7] What is the payoff of each player when they play mixed strategies in G', with Player 1 having eliminated f0?
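With f0 removed, G' is a 2 × 2 game whose best replies cycle, so it has no pure-strategy equilibrium. In the mixed equilibrium each player mixes so that the opponent is indifferent between their two pure strategies. This sketch applies those indifference conditions with exact fractions. It assumes G' consists of rows z0 and j0 and Player 2's second and third columns from part (a), in that order.

```python
from fractions import Fraction

# G' without f0: rows z0, j0 (Player 1); columns: Player 2's 2nd and 3rd columns from part (a).
A = [[2, 6], [6, 4]]  # Player 1's payoffs
B = [[5, 3], [5, 8]]  # Player 2's payoffs

def mixed_equilibrium_2x2(A, B):
    """Fully mixed equilibrium of a 2x2 game from the indifference conditions.

    p = P(Player 1 plays row 0), chosen so Player 2 is indifferent between columns;
    q = P(Player 2 plays column 0), chosen so Player 1 is indifferent between rows.
    """
    den_p = B[0][0] - B[1][0] - B[0][1] + B[1][1]
    den_q = A[0][0] - A[0][1] - A[1][0] + A[1][1]
    if den_p == 0 or den_q == 0:
        raise ValueError("indifference conditions have no unique solution")
    p = Fraction(B[1][1] - B[1][0], den_p)
    q = Fraction(A[1][1] - A[0][1], den_q)
    if not (0 < p < 1 and 0 < q < 1):
        raise ValueError("no fully mixed equilibrium")
    rows, cols = (p, 1 - p), (q, 1 - q)
    # Expected payoffs: sum over all cells of P(row) * P(column) * payoff.
    u1 = sum(rows[i] * cols[j] * A[i][j] for i in range(2) for j in range(2))
    u2 = sum(rows[i] * cols[j] * B[i][j] for i in range(2) for j in range(2))
    return p, q, u1, u2

p, q, u1, u2 = mixed_equilibrium_2x2(A, B)
print(p, q, u1, u2)   # 3/5 1/3 14/3 5
```

Player 1 plays z0 with probability 3/5 and Player 2 plays its first remaining column with probability 1/3, giving expected payoffs of 14/3 for Player 1 and 5 for Player 2.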

Question 4 [20 points]

Consider the blocks world. Here we have seven (7) blocks: A, B, C, D, E, F and G. There is also a table with a capacity of three (3) blocks (i.e., at most three distinct blocks can lie on the table at any one time). A block is either inside the box or outside it. When outside the box, a block is either on the table or on top of another block.

We have the following predicates:

ontable(x): block x is on the table;


on(x, y): block x lies on top of block y;

clear(x): block x is clear, i.e., there is nothing on top of it;

inbox(x): block x is inside the box.

Moreover, the following actions are introduced:

pick(x): picks block x from the box and drops it on the table;

drop(x, y): drops block x onto either the table or another block y.

Consider a partial plan Q containing two actions, a0 and a∞, with a0 ≺ a∞. The action a0 has the following effects:

ontable(B); ontable(C); ontable(E); clear(B); clear(C); clear(E); inbox(D); inbox(F); inbox(G);

The action a∞ leads to a goal state and has the following preconditions:

ontable(F); ontable(A); clear(Table); on(B, A); on(C, B); on(D, C); on(E, F);

Modify Q to generate a complete and correct plan.
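A partial-order planner adds steps between a0 and a∞ until every precondition of a∞ is supported. One way to sanity-check a candidate completion is to simulate a single linearization from a0's effects. This sketch does that under several assumptions:

- The preconditions of pick and drop are not given in the paper, so they are guessed here. pick needs the block in the box and a free table slot. drop needs x and the target to be clear, and a free slot when the target is the table.
- A is assumed to start in the box, because a0's effects do not mention it.
- clear(Table) is not modelled.

The action sequence in PLAN is one illustrative candidate, not the model answer.

```python
# Hypothetical STRIPS-style model; the preconditions below are assumptions.
TABLE_CAPACITY = 3
TABLE = "Table"

def on_table(state):
    """Number of blocks currently on the table."""
    return sum(1 for fact in state if fact[0] == "ontable")

def apply(state, action):
    """Return the successor of `state` under `action`, or raise ValueError."""
    name, *args = action
    s = set(state)
    if name == "pick":                                  # pick(x): box -> table
        (x,) = args
        if ("inbox", x) not in s or on_table(s) >= TABLE_CAPACITY:
            raise ValueError(f"pick({x}) is not applicable")
        s -= {("inbox", x)}
        s |= {("ontable", x), ("clear", x)}
        return frozenset(s)
    if name == "drop":                                  # drop(x, y): onto table or block y
        x, y = args
        if ("clear", x) not in s or ("inbox", x) in s or x == y:
            raise ValueError(f"drop({x}, {y}) is not applicable")
        if y == TABLE and (("ontable", x) in s or on_table(s) >= TABLE_CAPACITY):
            raise ValueError(f"drop({x}, {y}) is not applicable")
        if y != TABLE and ("clear", y) not in s:
            raise ValueError(f"drop({x}, {y}) is not applicable")
        for fact in [f for f in s if f[0] == "on" and f[1] == x]:
            s.discard(fact)                             # x leaves the block below it,
            s.add(("clear", fact[2]))                   # which becomes clear
        s.discard(("ontable", x))
        if y == TABLE:
            s.add(("ontable", x))
        else:
            s.add(("on", x, y))
            s.discard(("clear", y))
        return frozenset(s)
    raise ValueError(f"unknown action {name}")

# Effects of a0 from the paper, plus inbox(A), which is an assumption.
INITIAL = frozenset({
    ("ontable", "B"), ("ontable", "C"), ("ontable", "E"),
    ("clear", "B"), ("clear", "C"), ("clear", "E"),
    ("inbox", "D"), ("inbox", "F"), ("inbox", "G"),
    ("inbox", "A"),
})
# Preconditions of a-infinity, without clear(Table).
GOAL = {("ontable", "F"), ("ontable", "A"), ("on", "B", "A"),
        ("on", "C", "B"), ("on", "D", "C"), ("on", "E", "F")}

def run(plan, state=INITIAL):
    """Apply the actions in order, failing on the first inapplicable one."""
    for action in plan:
        state = apply(state, action)
    return state

# One illustrative linearization of the steps between a0 and a-infinity.
PLAN = [("drop", "C", "B"), ("pick", "F"), ("drop", "E", "F"), ("pick", "A"),
        ("drop", "C", "E"), ("drop", "B", "A"), ("drop", "C", "B"),
        ("pick", "D"), ("drop", "D", "C")]

print(GOAL <= run(PLAN))
```

Under these assumptions `run(PLAN)` satisfies every modelled precondition of a∞ while never exceeding the table's capacity. In partial-order planning terms, each step would also need the ordering constraints a0 ≺ step ≺ a∞ and causal links, which this linear check does not record.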


End of Exam