PBT602S - PROBABILITY THEORY 2 - 1ST OPP - JUNE 2022

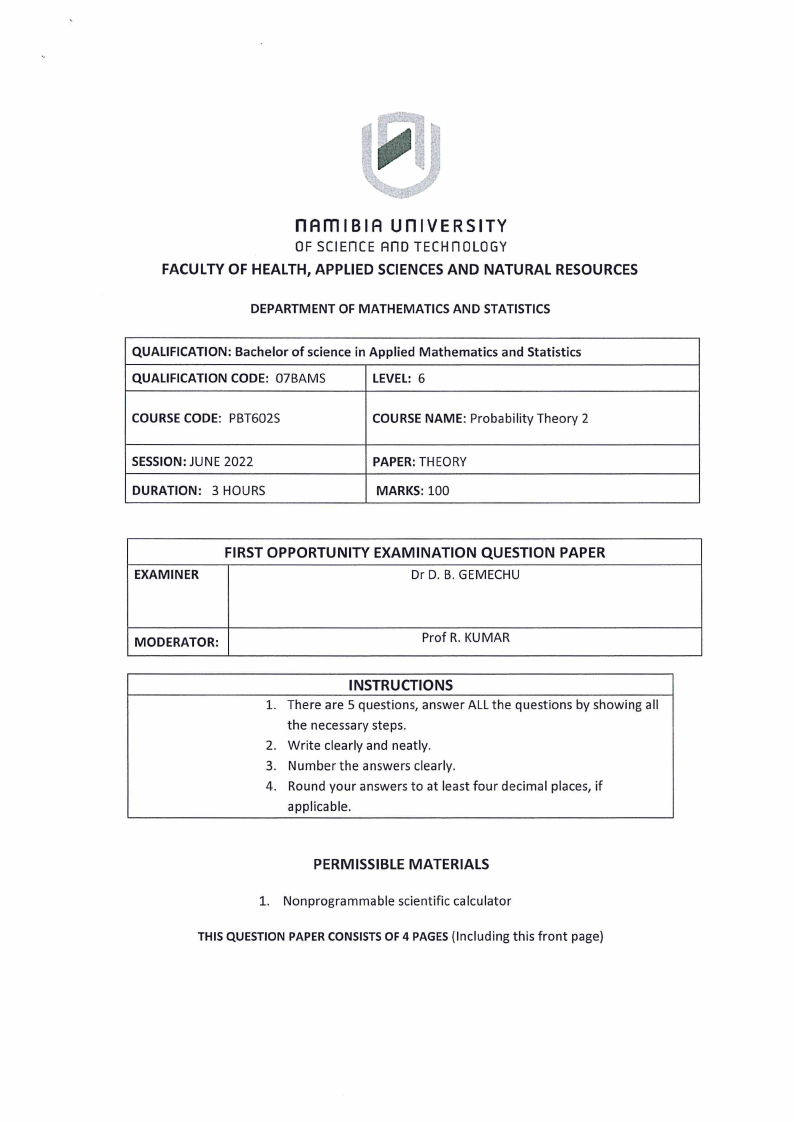

NAMIBIA UNIVERSITY OF SCIENCE AND TECHNOLOGY

FACULTY OF HEALTH, APPLIED SCIENCES AND NATURAL RESOURCES

DEPARTMENT OF MATHEMATICS AND STATISTICS

QUALIFICATION: Bachelor of Science in Applied Mathematics and Statistics

QUALIFICATION CODE: 07BAMS

LEVEL: 6

COURSE CODE: PBT602S

COURSE NAME: Probability Theory 2

SESSION: JUNE 2022

DURATION: 3 HOURS

PAPER: THEORY

MARKS: 100

FIRST OPPORTUNITY EXAMINATION QUESTION PAPER

EXAMINER: Dr D. B. GEMECHU

MODERATOR: Prof R. KUMAR

INSTRUCTIONS

1. There are 5 questions; answer ALL the questions by showing all the necessary steps.

2. Write clearly and neatly.

3. Number the answers clearly.

4. Round your answers to at least four decimal places, if applicable.

PERMISSIBLE MATERIALS

1. Nonprogrammable scientific calculator

THIS QUESTION PAPER CONSISTS OF 4 PAGES (Including this front page)

Question 1 [12 marks]

1.1. Define the following terms:

1.1.1. Probability function

[3]

1.1.2. Power set

[1]

1.1.3. σ-algebra

[2]

1.1.4. Consider an experiment of rolling a die with six faces once.

1.1.4.1. Show that the set $\sigma(X) = \{\emptyset, S, \{1,2,4\}, \{3,5,6\}\}$ is a sigma algebra, where S represents the sample space for a random experiment of rolling a die with six faces.

[3]

1.1.4.2. Given a set $Y = \{\{1,2,3,5\}, \{4\}, \{6\}\}$, generate the smallest sigma algebra, $\sigma(Y)$, that contains the set Y.

[3]

Question 2 [24 marks]

2.1. Let X be a continuous random variable with p.d.f. given by

$$f(x) = \begin{cases} x + 1, & \text{for } -1 < x < 0, \\ 1 - x, & \text{for } 0 \le x < 1, \\ 0, & \text{otherwise.} \end{cases}$$

Then find the cumulative distribution function of X.

[7]

2.2. Suppose that the joint CDF of a two-dimensional continuous random variable is given by

$$F_{XY}(x, y) = \begin{cases} 1 - e^{-x} - e^{-y} + e^{-(x+y)}, & \text{if } x > 0;\ y > 0, \\ 0, & \text{otherwise.} \end{cases}$$

Then find the joint p.d.f. of X and Y.

[4]

2.3. Consider the following joint pdf of X and Y.

[7]

$$f(x, y) = \begin{cases} 2, & x > 0;\ y > 0;\ x + y < 1, \\ 0, & \text{elsewhere.} \end{cases}$$

2.3.1. Find the marginal probability density function of Y, $f_Y(y)$

[2]

2.3.2. Find the conditional probability density function of X given Y = y, $f_X(x \mid Y = y)$

[2]

2.3.3. Find $P(X < \ldots \mid Y = \ldots)$

[3]

2.4. Let $Y_1$, $Y_2$, and $Y_3$ be three random variables with $E(Y_1) = 2$, $E(Y_2) = 3$, $E(Y_3) = 2$, $\sigma^2_{Y_1} = 2$, $\sigma^2_{Y_2} = 3$, $\sigma^2_{Y_3} = 1$, $\sigma_{Y_1 Y_2} = -0.6$, $\sigma_{Y_1 Y_3} = 0.3$, and $\sigma_{Y_2 Y_3} = 2$.

2.4.1. Find the expected value and variance of $U = 2Y_1 - 3Y_2 + Y_3$

[2]

2.4.2. If $W = Y_1 + 2Y_3$, find the covariance between U and W

[4]

QUESTION 3 [27 marks]

3.1. Let X be a discrete random variable with a probability mass function P(x). Show that the moment generating function of X is a function of all the moments $\mu'_k$ about the origin, which is given by

$$M_X(t) = E(e^{tX}) = 1 + \frac{t}{1!}\mu'_1 + \frac{t^2}{2!}\mu'_2 + \cdots + \frac{t^k}{k!}\mu'_k + \cdots$$

Hint: use Taylor's series expansion: $e^x = \sum_{i=0}^{\infty} \frac{x^i}{i!}$

[5]

3.2. Let $X_1, X_2, \ldots, X_n$ be a random sample from a Gamma distribution with parameters α and θ, that is

$$f(x \mid \alpha, \theta) = \begin{cases} \ldots, & \text{for } x > 0;\ \alpha > 0;\ \theta > 0, \\ 0, & \text{otherwise.} \end{cases}$$

3.2.1. Show that the moment generating function of $X_i$ is given by $M_{X_i}(t) = (\ldots)^{\alpha}$

[6]

3.2.2. Find the mean of X using the moment generating function of X.

[4]

3.3. Suppose that X is a random variable having a binomial distribution with the parameters n and p

(i.e., X~Bin(n, p))

3.3.1. Find the cumulant generating function of X and find the first cumulant.

Hint: $M_X(t) = (1 - p(1 - e^t))^n$

[4]

3.3.2. If we define another random variable Y = aX + b, where a and b are any constants, then derive the moment generating function of Y.

[3]

3.4. Let X and Y be two continuous random variables with f(x) and g(y) being the pdfs of X and Y, respectively. Show that $E[g(Y)] = E[E[g(Y) \mid X]]$.

[5]

Question 4 [20 marks]

4.1. Suppose that X and Y are two independent random variables following a chi-square distribution with m and n degrees of freedom, respectively. Use the moment generating function to show that $X + Y \sim \chi^2(m + n)$. (Hint: $M_X(t) = \left(\frac{1}{1 - 2t}\right)^{m/2}$).

[7]

4.2. If $X \sim \text{Poisson}(\lambda)$, find E(X) and Var(X) using the characteristic function of X.

4.2.1. Show that the characteristic function of X is given by $\phi_X(t) = e^{\lambda(e^{it} - 1)}$

[5]

4.2.2. Find E(X) and Var(X) using the characteristic function of X.

[8]

QUESTION 5 [17 marks]

5.1. Let $X_1$ and $X_2$ be independent random variables with the joint probability density function given by

$$f_{X_1, X_2}(x_1, x_2) = \begin{cases} e^{-(x_1 + x_2)}, & \text{if } x_1 > 0;\ x_2 > 0, \\ 0, & \text{otherwise.} \end{cases}$$

Find the joint probability density function of $Y_1 = X_1 + X_2$ and $Y_2 = \ldots$

[10]

5.2. Let X and Y be independent Poisson random variables with parameters $\lambda_1$ and $\lambda_2$. Use the convolution formula to show that X + Y is a Poisson random variable with parameter $\lambda_1 + \lambda_2$.

[7]

=== END OF PAPER ===

TOTAL MARKS: 100
